A Critical Choice: Advocating for Inclusion in Face Recognition Technology

In the rapidly advancing field of face recognition technology, the importance of inclusive systems cannot be overstated. The stakes are incredibly high, especially for financial institutions and other sensitive sectors. Failing to choose the model with the best demographic performance is not only unethical but can also lead to severe legal, financial, and reputational repercussions.

With the EU AI Act and other similar regulations looming on the horizon, institutions that neglect to address demographic shortcomings in their face recognition systems risk non-compliance and hefty fines. Moreover, discrimination caused by poorly performing algorithms has made headlines, exposing organizations to public backlash and litigation.

Organizations cannot afford to be complacent. Imagine the devastating impact of a financial institution using a face recognition system with high error rates for some demographic groups. Incorrect matches could lead to identity theft, wrongful denial of services, or even unlawful detentions. These scenarios are not just hypothetical; they are real risks that could result in significant financial losses and damage to reputation.

Role of NIST in Advocating for Inclusion

The National Institute of Standards and Technology (NIST) plays a pivotal role in combating demographic differentials and advocating for inclusion in face recognition. Through its rigorous Face Recognition Technology Evaluation (FRTE) 1:1 Verification tests, NIST provides an essential standardized framework for assessing the performance of face recognition systems across diverse demographic groups. These evaluations are crucial in identifying and addressing differences in algorithmic accuracy that could lead to biased outcomes. By highlighting the strengths and weaknesses of different technologies, NIST drives the industry towards greater ethical responsibility, ensuring that advancements in face recognition are both fair and inclusive. This commitment not only fosters public trust but also promotes the development of technologies that respect and protect the rights of all individuals, regardless of race, age, or gender.

Understanding Key Metrics in Face Recognition

The NIST FRTE 1:1 Verification includes several important metrics, such as the False Non-Match Rate (FNMR), which measures the rate at which two images of the same person are incorrectly rejected as a non-match, and the False Match Rate (FMR), which measures the rate at which an individual is incorrectly matched with another identity.
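To make the two definitions concrete, here is a minimal sketch of how FNMR and FMR could be computed from comparison scores at a fixed threshold. The function name, the score values, and the threshold are all invented for illustration; this is not NIST's evaluation code.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (FNMR, FMR) at a similarity threshold.

    genuine_scores: similarity scores for pairs showing the same person.
    impostor_scores: similarity scores for pairs showing different people.
    A pair is declared a match when its score is >= threshold.
    """
    false_non_matches = sum(1 for s in genuine_scores if s < threshold)
    false_matches = sum(1 for s in impostor_scores if s >= threshold)
    fnmr = false_non_matches / len(genuine_scores)
    fmr = false_matches / len(impostor_scores)
    return fnmr, fmr


# Invented scores, purely illustrative.
genuine = [0.91, 0.85, 0.40, 0.77]
impostor = [0.10, 0.62, 0.05, 0.20, 0.15]

fnmr, fmr = error_rates(genuine, impostor, threshold=0.60)
print(f"FNMR={fnmr:.2f}, FMR={fmr:.2f}")
```

Raising the threshold generally lowers FMR at the cost of a higher FNMR, which is why both numbers have to be read together.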

However, when it comes to demographic inclusion, the Maximum False Match Rate (FMR Max) is particularly critical. It measures the highest rate at which the system incorrectly matches two different individuals within a single demographic cohort (defined by age, gender, and region of birth). This metric is a crucial indicator of a system’s worst-case performance regarding demographic shortcomings and inclusion. A low FMR Max value is vital because it suggests that the technology is less likely to produce false positives for any given demographic group. In contexts where face recognition decisions have significant repercussions, such as banking, payments, and financial services, as well as digital identity verification and border control, maintaining a low FMR Max is essential to prevent ethical breaches and uphold the principle of equal treatment under the technology.
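As a rough sketch of the idea, FMR Max can be thought of as the largest per-cohort false match rate. The cohort labels and counts below are invented, and the helper names are hypothetical; NIST's actual cohort construction and thresholds are not reproduced here.

```python
def fmr_by_cohort(counts):
    """Map each cohort to its false match rate.

    counts maps a cohort label to (false_matches, impostor_comparisons),
    where both people in every impostor pair belong to that cohort.
    """
    return {cohort: fm / total for cohort, (fm, total) in counts.items()}


def fmr_max(counts):
    """Return (worst_cohort, fmr) for the cohort with the highest FMR."""
    rates = fmr_by_cohort(counts)
    worst = max(rates, key=rates.get)
    return worst, rates[worst]


# Invented counts for three made-up cohorts; not NIST data.
example_counts = {
    "cohort_a": (2, 10_000),
    "cohort_b": (9, 10_000),
    "cohort_c": (0, 10_000),
}

worst_cohort, worst_rate = fmr_max(example_counts)
print(worst_cohort, worst_rate)
```

Reporting the cohort alongside the rate matters, because it shows which population bears the worst-case error.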

By focusing on the FMR Max, stakeholders can gauge the ethical and legal implications of deploying a particular face recognition technology. The metric also shows developers where their algorithms most need improvement, so that advances in face recognition move in tandem with the ideals of fairness and inclusivity. This focus not only aids regulatory compliance but also builds public trust in how these technologies are applied in real-world scenarios.

Organizational Risks of Demographic Differentials in Face Recognition Systems

Face recognition systems with high demographic differentials pose multiple organizational risks, including:

- Ethical Risk: Face recognition systems with poor performance on a range of demographics can perpetuate discrimination and inequality, undermining an organization’s commitment to ethical practices. The failure to address these biases can lead to systemic injustices, impacting marginalized communities and contradicting corporate social responsibility efforts.

- Customer Experience Risk: Demographic differentials in face recognition systems can negatively impact customer experience, leading to dissatisfaction and loss of loyalty. Customers who experience wrongful denial of services or privacy violations are likely to seek alternatives, reducing customer retention and harming long-term business relationships.

- Financial Risk: Inaccurate face recognition technology can result in significant financial losses. Incorrect matches may lead to fraud, unauthorized access, or wrongful denial of services, causing costly disruptions. Additionally, fines from regulatory bodies due to non-compliance with anti-bias regulations can further strain financial resources.

- Legal Risk: Organizations using poorly performing face recognition systems face increased legal risks. Discriminatory practices can lead to lawsuits, regulatory enforcement, and hefty penalties under laws like the EU AI Act. Legal challenges not only deplete financial resources but also divert focus from core business activities, impacting overall productivity.

- Reputational Risk: The use of non-inclusive face recognition technology can severely damage an organization’s reputation. Public exposure of discriminatory practices can lead to a loss of trust among customers, investors, and the general public. Rebuilding a tarnished reputation requires significant time, effort, and resources, often with no guarantee of full recovery.

Paravision’s Standout Performance

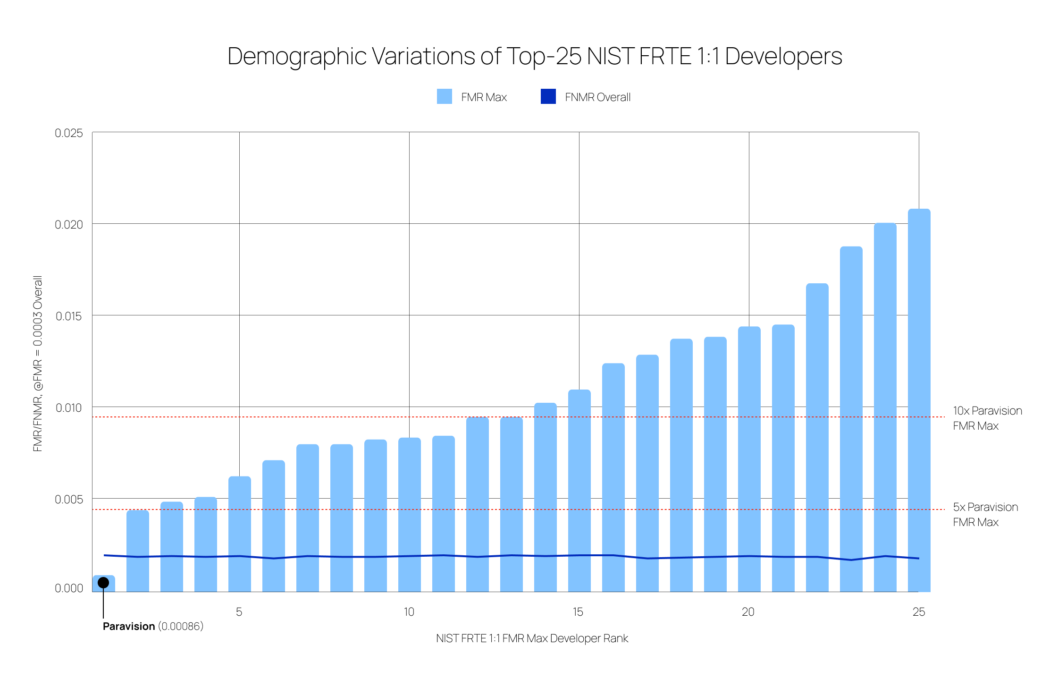

Paravision’s performance in the most recent FRTE 1:1 Verification leaderboard highlights its leadership in developing fair and reliable face recognition technology. Achieving an FMR Max of just 0.00086, Paravision has demonstrated superior performance in minimizing false matches across all demographic groups.

As seen in the table and graph below, when compared with the top 25 algorithms on the NIST FRTE 1:1 Verification leaderboard (as ranked by overall FNMR), Paravision’s FMR Max is significantly lower than that of any other vendor:

- The next best vendor’s FMR Max is more than five times (i.e., over 500% of) Paravision’s.

- The worst-performing vendor in the top 25 has an FMR Max more than 24 times (i.e., over 2,400% of) Paravision’s.

- Paravision is one of only three vendors (with Regular Biometrics Solutions and Viante) in the top 25 achieving an FMR Min of 0, meaning that the best-performing demographic group has no false matches whatsoever.

- Paravision’s FMR Max is found with Eastern European males aged 12-20. In contrast, the worst-case demographic group for all other vendors in the top 25 is Western African females aged 65-99.

| FMR Max Rank | Algorithm | FMR Max | FMR Max vs Paravision | FMR Max Group | FNMR Overall |
| --- | --- | --- | --- | --- | --- |
| 1 | paravision_013 | 0.00086 | 1.0x | E.Europe M (12-20] | 0.0019 |
| 2 | toshiba_008 | 0.00439 | 5.1x | W.Africa F (65-99] | 0.0017 |
| 3 | rebs_001 | 0.00486 | 5.7x | W.Africa F (65-99] | 0.0018 |
| 4 | stcon_003 | 0.00508 | 5.9x | W.Africa F (65-99] | 0.0017 |
| 5 | kakao_009 | 0.00623 | 7.2x | W.Africa F (65-99] | 0.0018 |
| 6 | cloudwalk_mt_007 | 0.0071 | 8.3x | W.Africa F (65-99] | 0.0015 |
| 7 | samsungsds_002 | 0.00797 | 9.3x | W.Africa F (65-99] | 0.0018 |
| 8 | psl_012 | 0.00802 | 9.3x | W.Africa F (65-99] | 0.0017 |
| 9 | surrey_003 | 0.00826 | 9.6x | W.Africa F (65-99] | 0.0017 |
| 10 | roc_016 | 0.00831 | 9.7x | W.Africa F (65-99] | 0.0018 |
| 11 | clearviewai_001 | 0.00845 | 9.8x | W.Africa F (65-99] | 0.0019 |
| 12 | cybercore_003 | 0.00947 | 11.0x | W.Africa F (65-99] | 0.0017 |
| 13 | nhn_005 | 0.00951 | 11.1x | W.Africa F (65-99] | 0.0019 |
| 14 | tnitech_000 | 0.01023 | 11.9x | W.Africa F (65-99] | 0.0018 |
| 15 | neurotechnology_018 | 0.01089 | 12.7x | W.Africa F (65-99] | 0.0019 |
| 16 | vocord_010 | 0.01232 | 14.3x | W.Africa F (65-99] | 0.0019 |
| 17 | qazsmartvisionai_000 | 0.01282 | 14.9x | W.Africa F (65-99] | 0.0015 |
| 18 | viante_000 | 0.01366 | 15.9x | W.Africa F (65-99] | 0.0016 |
| 19 | cmcuni_001 | 0.01381 | 16.1x | W.Africa F (65-99] | 0.0017 |
| 20 | megvii_009 | 0.01441 | 16.8x | W.Africa F (65-99] | 0.0018 |
| 21 | idemia_010 | 0.01449 | 16.8x | W.Africa F (65-99] | 0.0017 |
| 22 | sensetime_008 | 0.01709 | 19.9x | W.Africa F (65-99] | 0.0017 |
| 23 | recognito_001 | 0.01878 | 21.8x | W.Africa F (65-99] | 0.0013 |
| 24 | adera_004 | 0.02001 | 23.3x | W.Africa F (65-99] | 0.0018 |
| 25 | intema_001 | 0.02071 | 24.1x | W.Africa F (65-99] | 0.0015 |
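The multipliers in the "FMR Max vs Paravision" column appear to be each algorithm's FMR Max divided by Paravision's 0.00086, rounded to one decimal place. The short sketch below reproduces that arithmetic for three rows copied from the table; the variable and function names are illustrative only.

```python
# FMR Max values copied from three rows of the table above.
fmr_max_values = {
    "paravision_013": 0.00086,
    "toshiba_008": 0.00439,
    "intema_001": 0.02071,
}


def ratio_vs_baseline(values, baseline_name):
    """Return each algorithm's FMR Max as a multiple of the baseline's."""
    baseline = values[baseline_name]
    return {name: round(v / baseline, 1) for name, v in values.items()}


ratios = ratio_vs_baseline(fmr_max_values, "paravision_013")
for name, multiple in ratios.items():
    print(f"{name}: {multiple}x")
```

The same division applied to the remaining rows yields the other multipliers listed in the table.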

Why This Matters

The significance of a low FMR Max in Paravision’s technology cannot be overstated, especially in high-stakes applications like border security, financial services, identity programs, and government services. Precision is essential, and errors can have severe consequences. While many vendors perform similarly on metrics like FNMR and FMR Min, significant disparities are evident in the FMR Max, highlighting a system’s ability to manage the worst-case scenarios of demographic performance.

Achieving the lowest FMR Max is a testament to Paravision’s commitment to our published AI Principles, fairness, inclusivity, and delivering systems that maximize positive outcomes for all. As the industry evolves, the importance of demographic inclusion will increasingly come to the forefront. Choosing a provider with high ethical standards means investing in a future where technology serves everyone equitably, ensuring that the benefits of face recognition technologies are accessible to all.

Paravision’s Approach to Inclusion

While reducing bias is a crucial goal to which Paravision is deeply committed, we believe the ultimate goal should be inclusion through minimizing overall error rates. By striving to eliminate errors altogether, we aim to create a world where our AI solutions empower individuals universally, enhancing access and opportunities without compromising accuracy or fairness. The fence metaphor, often used in Diversity, Equity, and Inclusion discussions, can help illustrate different approaches to inclusion in face recognition systems.

On the left, the fence represents face recognition systems with high demographic differential, meaning they perform better for some groups than others. This approach is biased and creates significant risks for companies.

The middle image shows a system minimizing demographic differentials, with equal performance across groups but with high overall error rates. While fair and equal, these systems create barriers for users and operational risks for companies.

The rightmost image represents Paravision’s approach: removing barriers by reducing error rates across all groups to an absolute minimum. This approach enables excellent performance for all groups, reducing friction and organizational risks.

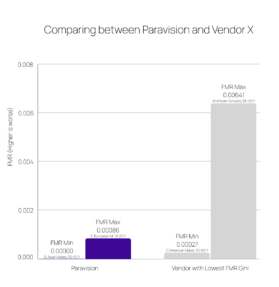

What about FMR Gini?

FMR Gini measures the disparity in FMR across various demographic groups. A Gini coefficient of 0 would mean perfect equality, while a Gini coefficient of 1 would indicate maximum inequality.
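For intuition, the textbook Gini coefficient over a list of per-group FMR values can be sketched as below. This is the standard mean-absolute-difference form, used here as an assumption; the exact formula behind NIST's FMR Gini, including any small-sample normalization, is not given in this article and may differ. The input values are invented.

```python
def gini(values):
    """Textbook Gini coefficient of a list of non-negative values.

    Computed as the mean absolute difference between all ordered pairs,
    divided by twice the mean. Returns 0.0 when every value is zero.
    """
    n = len(values)
    mean = sum(values) / n
    if mean == 0:
        return 0.0
    total_abs_diff = sum(abs(a - b) for a in values for b in values)
    return total_abs_diff / (2 * n * n * mean)


# Invented per-group FMR values, purely illustrative.
equal_groups = [0.001, 0.001, 0.001, 0.001]
one_bad_group = [0.0, 0.0, 0.0, 1.0]

print(gini(equal_groups))   # identical rates: no disparity
print(gini(one_bad_group))  # all false matches fall on one group
```

With this plain form, the most unequal case over n groups evaluates to (n - 1) / n rather than exactly 1, so it approaches 1 only as the number of groups grows. Note also that a Gini value is scale-free: multiplying every group's FMR by ten leaves it unchanged, which is why it says nothing about how high the error rates are.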

In NIST’s 1:1 Demographic Variations FMR leaderboard, the vendor with the lowest FMR Gini has a Gini of 0.38. While this indicates relatively small differences between demographic groups, it’s important to also consider the vendor’s FMR Max and FMR Min to get the full picture. That same vendor’s overall error rates are significantly higher than Paravision’s, as seen in the accompanying graph. Although reducing differences between groups is vital for the industry, Paravision believes the goal should be to remove barriers completely and achieve the lowest possible FMR Max, ensuring good performance for all demographic groups.

Why Does Paravision Deliver Such Low Error Rates Across Demographics?

Paravision is dedicated to creating fair and inclusive face recognition technology. Here are the key ways we advocate for inclusion:

- High-Performing Technology: Paravision’s face recognition systems are designed to be accurate and reliable across all demographic groups. Our advanced algorithms minimize error rates across demographic groups and deliver precise results, even in challenging scenarios, as evidenced by our leading performance in the NIST FRTE tests.

- Company-Wide AI Principles: Our strong AI principles, emphasizing ethical AI development, guide every aspect of our business and technology, ensuring fairness, transparency, and accountability from design to deployment.

- Diverse Team: The diverse team at Paravision is crucial to developing inclusive technology. Incorporating various backgrounds and perspectives enables us to create innovative solutions that consider a wide range of demographic factors, enhancing the fairness and inclusivity of our systems.

- Belief in Benchmarking: We believe in the importance of benchmarking to achieve fairness, and regularly participate in evaluations like the NIST FRTE 1:1 to ensure our technology meets high standards and continuously improves. Benchmarking provides an objective measure of performance, helping us identify and address any potential demographic differentials.

- Genuine Care: Paravision prioritizes the ethical implications of face recognition technology, striving to create solutions that respect and protect all users. This commitment to fairness and inclusivity ensures our technology benefits everyone, delivering equitable outcomes in all applications.

Key Questions to Ask Your Vendor

To mitigate these risks, financial institutions should rigorously vet their face recognition technology providers. Here are crucial questions to pose:

- Whose face recognition algorithm do you use? Is it your own, or a third party? If the latter, whose is it?

- Has that algorithm been benchmarked on NIST FRTE 1:1?

- Has that algorithm been benchmarked for demographic performance within that test?

- What is your worst-case FMR Max and on what population?

- How do you advocate for inclusion in face recognition internally?

Final Words

Financial institutions, identity solution developers, and digital service providers must act now. The cost of ignoring these critical factors can be catastrophic. Ensure your organization is protected by demanding transparency, rigorous benchmarking, and unwavering commitment to ethical AI from your face recognition technology providers.

By doing so, you not only safeguard your institution but also contribute to a more just and equitable technological landscape.
