This article, featuring insights from Paravision’s Chief Product Officer, Joey Pritikin, was created as part of the Kantara DeepfakesIDV discussion group, with contributions from industry experts including Daniel Bachenheimer. It builds on the earlier Presentation & Injection Attack Detection flowchart shared by Stephanie Schuckers, Director of the Center for Identification Technology Research (CITeR). Kantara’s DeepfakesIDV discussion group is a collaborative industry effort focused on addressing the threat of deepfakes and related attacks in digital ID verification systems.

Introduction

Remote identity verification systems face significant risks from the evolving threats of deepfake technology. Deepfakes, which involve the creation of highly realistic but fake audio, video, and still images, can be used to compromise these systems in various ways. Attackers may use deepfake technology to present falsified identities, modify genuine documents, or create synthetic personas, exploiting weaknesses in the verification process.

This summary explores the broad types of deepfake attacks; specific methods such as face swaps, expression swaps, synthetic imagery, and synthetic audio; and the various points of attack, including physical presentation attacks, injection attacks, and insider threats. Understanding these threats is crucial for developing robust defenses against the manipulation of identity verification systems.

Note: Deepfake attacks are a threat to a wide range of services, systems, and domains, from social and traditional media to national security and human rights to banking and access to digital systems. Here, we will focus specifically on deepfake threats and attack vectors in the scope of remote identity verification.

Types of Deepfake Attacks

Deepfakes are not “one thing,” but rather a class of digital face and voice manipulation and / or synthesis techniques that can be used to undermine a remote digital identity scheme.

- Still Imagery Deepfakes:

  - Face Swaps: Replacing a person’s face in an image or video with another person’s face, often seamlessly.

  - StyleGAN2-Type Synthetic Imagery: Generating highly realistic human faces that do not belong to any real person.

  - Diffusion-Based Imagery (e.g., Midjourney, Stable Diffusion): Creating realistic images from textual descriptions or other input images, making it possible to fabricate convincing identity photos.

- Audio Deepfakes:

  - Synthetic Speech (e.g., ElevenLabs): Using tools to create realistic synthetic voices that impersonate someone during a voice verification process.

  - Voice Cloning: Replicating someone’s voice to bypass voice authentication systems.

- Video Deepfakes (which may combine the still imagery and audio techniques discussed above):

  - Expression Swaps: Altering the facial expressions of a person in a video to match those of another person.

  - Next-Gen Video Avatars (e.g., Synthesia, HeyGen): Creating fully synthetic avatars that can move and speak like real humans, making it hard to distinguish between real and fake identities.

Points of Attack

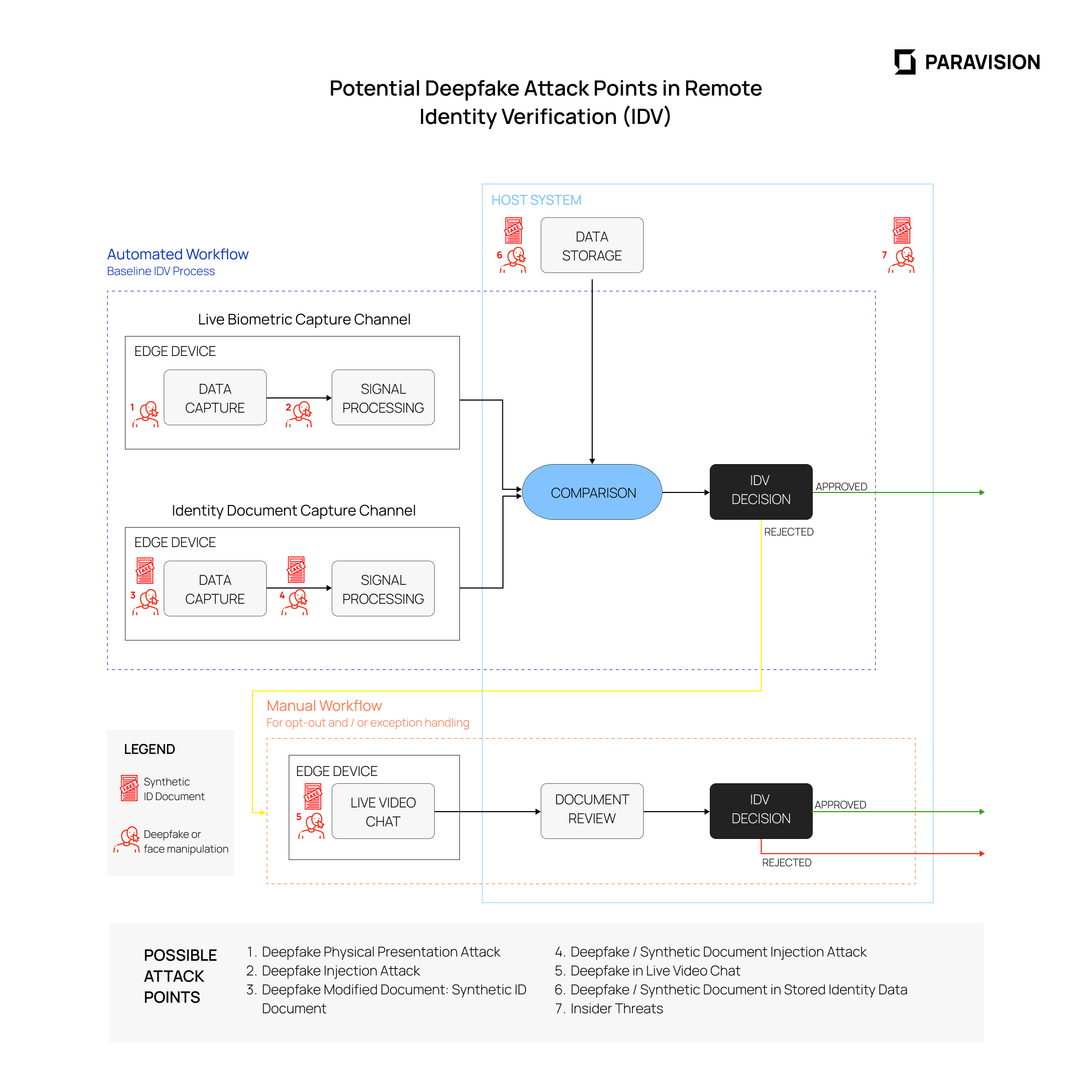

The following diagram summarizes key attack points for deepfakes in remote identity verification (IDV). It follows from earlier work on codifying possible presentation attack points in biometric systems as included in ISO/IEC 30107-1:2016 and other industry research.

Workflow Summary

This diagram provides an overview of a standard remote IDV process, including automated capture of biometrics (e.g., selfie photo) and identity documents. Capture of biometric and identity document data is followed by a biometric-to-document comparison and / or comparison with stored data in the host system. These comparisons are followed by an IDV decision.

If the automated IDV attempt is rejected, or if a user opts out of the automated check, the system falls back to a manual review. During manual review, the adjudicator may still use software tools to help verify the authenticity of presented identity documents. Like the automated workflow, the manual workflow ends in acceptance or rejection.
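The routing described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: `idv_decision`, `automated_check`, and `manual_review` are hypothetical names, and the two callables are placeholders for the real capture-and-comparison pipeline and for a human adjudicator, not part of any product or standard.

```python
from enum import Enum
from typing import Callable, Tuple


class Decision(Enum):
    ACCEPT = "accept"
    REJECT = "reject"


def idv_decision(
    automated_check: Callable[[], Decision],
    manual_review: Callable[[], Decision],
    user_opted_out: bool = False,
) -> Tuple[str, Decision]:
    """Route one IDV attempt and return (workflow used, final decision).

    automated_check is a hypothetical stand-in for capture plus
    biometric-to-document and/or stored-data comparison; manual_review is a
    hypothetical stand-in for a human adjudicator.
    """
    if not user_opted_out:
        result = automated_check()
        if result is Decision.ACCEPT:
            return "automated", result
    # A rejected automated attempt, or an opt-out, falls back to manual review,
    # which also ends in acceptance or rejection.
    return "manual", manual_review()


if __name__ == "__main__":
    # A rejected automated check that a human reviewer later accepts.
    workflow, decision = idv_decision(lambda: Decision.REJECT, lambda: Decision.ACCEPT)
    print(workflow, decision.value)  # manual accept
```

Note that in this flow an automated rejection is not final, so the manual channel is itself a path to acceptance; this is why the live video chat used in manual review appears as a separate attack point.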

Deepfake Attack Points

Deepfake attacks are related in many ways to biometric presentation attacks, but they are a cause for concern in more than one capture channel in remote IDV. The diagram highlights three front-end capture channels that are open to deepfake attacks:

- Automated Workflow – Live Biometric Capture: Deepfakes can either be used as a part of a physical presentation attack (point 1 in the diagram) or injected into the capture software interface (point 2 in the diagram).

- Automated Workflow – Identity Document Capture: Likewise, deepfakes can be physically presented, either as a face inserted into a physical document or as part of a fully synthetic (but physically manifested) document (point 3 in the diagram), or they can be injected as a digital image or video into the capture software interface (point 4 in the diagram). Systems that use a “photo bucket” or “photo picker” for identity document upload are particularly susceptible to attacks at point 4.

- Manual Workflow – Live Video Chat: Because many IDV systems use live video chat for manual reconciliation or support, this video channel becomes an attack point for injected video that simulates a webcam (point 5 in the diagram).

In addition to these three front-end capture channels, the host (backend) system may also be susceptible to deepfake attacks, including:

- Stored Data: Deepfaked face images, deepfaked faces within identity documents, or fully synthetic identity documents may be stored as references in a backend system (point 6 in the diagram) due to lack of proper detection mechanisms at the time of original capture or ingestion.

- Insider Threats: The potential for human subversion of a host system (point 7 in the diagram) is an ever-present risk. Even if originally captured data was authentic, nefarious actors could replace face and / or identity document records with deepfakes if the proper protection and detection protocols are not in place.
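For threat modeling or test planning, the seven numbered points above can be encoded as a small lookup table. This is a minimal sketch; the class names, field names, and vector labels are hypothetical choices for illustration, not part of the Kantara flowchart or ISO/IEC 30107-1.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Vector(Enum):
    PHYSICAL_PRESENTATION = "physical presentation"
    INJECTION = "digital injection"
    STORED_DATA = "stored reference data"
    INSIDER = "insider threat"


@dataclass(frozen=True)
class AttackPoint:
    number: int    # point label as used in the diagram
    location: str  # "front end" or "backend"
    channel: str   # capture channel or backend asset
    vector: Vector


ATTACK_POINTS = (
    AttackPoint(1, "front end", "live biometric capture", Vector.PHYSICAL_PRESENTATION),
    AttackPoint(2, "front end", "live biometric capture", Vector.INJECTION),
    AttackPoint(3, "front end", "identity document capture", Vector.PHYSICAL_PRESENTATION),
    AttackPoint(4, "front end", "identity document capture", Vector.INJECTION),
    AttackPoint(5, "front end", "live video chat", Vector.INJECTION),
    AttackPoint(6, "backend", "stored reference data", Vector.STORED_DATA),
    AttackPoint(7, "backend", "host system", Vector.INSIDER),
)


def points_for(vector: Vector) -> List[int]:
    """Return the diagram point numbers that share an attack vector."""
    return [p.number for p in ATTACK_POINTS if p.vector is vector]


def front_end_channels() -> List[str]:
    """Return the distinct front-end capture channels, in diagram order."""
    seen: List[str] = []
    for p in ATTACK_POINTS:
        if p.location == "front end" and p.channel not in seen:
            seen.append(p.channel)
    return seen


if __name__ == "__main__":
    print(points_for(Vector.INJECTION))  # [2, 4, 5]
    print(len(front_end_channels()))     # 3
```

Grouping by vector makes it easy to check, for example, that injection attack detection covers points 2, 4, and 5 rather than only the selfie capture path.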

Evolution of Deepfake Attacks

While significant risks and attack vectors exist today, the threats are growing rapidly with the remarkable pace of technological advancement, particularly in and around generative AI.

- Improved Visual and Audio Quality: Deepfake technology is rapidly advancing, producing increasingly realistic and convincing fake content that is harder to detect.

- Personalization and Targeting: Deepfakes are becoming more sophisticated in mimicking specific individuals, making them more effective in targeted attacks.

- Integration with Other Attack Vectors: Deepfakes are being combined with other cyber attack methods, creating more complex and difficult-to-detect threats.

- Real-Time Manipulation: Advancements in processing power and algorithms are enabling real-time deepfake creation and manipulation, posing new challenges for detection.

- Scalability and Behavioral Mimicry: Deepfake technology is evolving to replicate not just appearance and voice, but also mannerisms and behaviors, making detection even more challenging.

Conclusion

Deepfake threats present significant challenges to remote identity verification systems. The broad range of attack vectors, from audio and video to still imagery, and the specific methods employed, such as face swaps, expression swaps, synthetic imagery, and synthetic audio, underscore the sophistication and potential impact of these threats. Points of attack span physical presentation, data injection, live interactions, stored reference data, and insider threats, highlighting the need for robust security measures and continuous advancements in detection technologies to mitigate the risks posed by deepfakes.
